DataView.in: Empowering Technophiles and Aspiring Data Scientists to Shape the Future

Unleash your potential with DataView, the ultimate web-based platform tailored for technophiles, students, and freshers passionate about skyrocketing their careers in Data Science. Our platform provides a treasure trove of in-depth blogs, premium courses, engaging videos, and dynamic discussions to unlock knowledge, sharpen skills, and seize unparalleled opportunities.

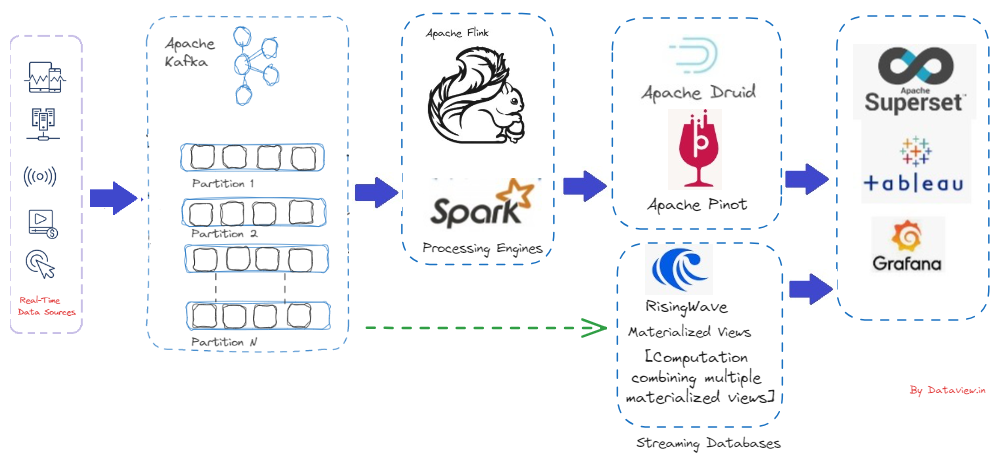

Dive into real-world scenarios with hands-on use-case analysis, followed by the design and development of proof-of-concept (POC) projects. Whether it’s analyzing real-time streaming data, designing digital payment gateways, or managing complex architectures, DataView prepares you for the challenges of the modern digital landscape.

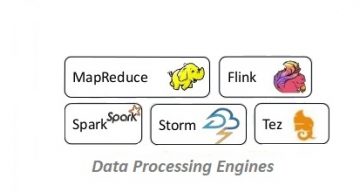

Join a community focused on mastering the tools and techniques adopted by global IT leaders and multinational corporations. Our platform aligns learning with key business goals, helping you close critical skills gaps in areas such as big data security, ultra-fast data processing engines, and cluster management in distributed environments.

In a world of exponential digital data growth, staying ahead means more than just understanding technology. It’s about creating solutions that solve real problems, building skills that drive innovation, and fostering knowledge that propels businesses forward. DataView equips you with the right tools, the right mindset, and the right skills to lead in a competitive marketplace.

We also provide on-demand in-person training and host interactive workshops, so that learning is accessible and tailored to your needs. With DataView, you don’t just learn; you become a trailblazer of the digital age.

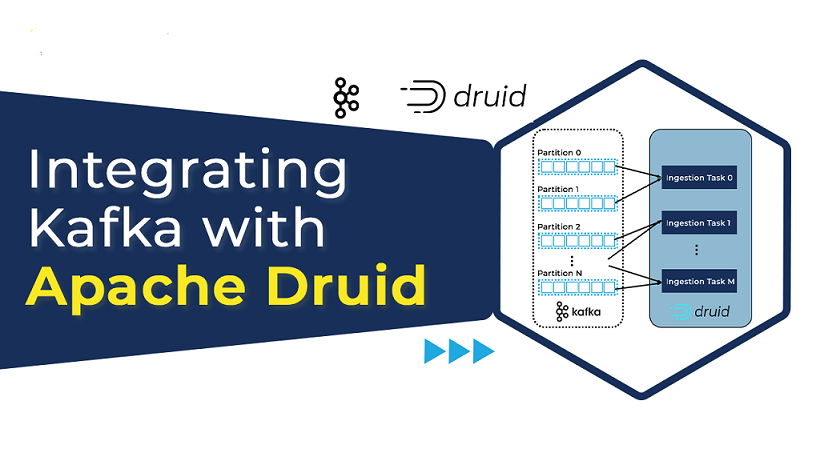

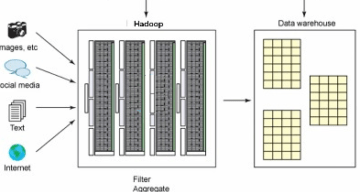

Technology Platforms for Big Data Processing and Analysis:

Download Our Free E-Books!!