Exploring Telemetry: Apache Kafka’s Role in Telemetry Data Management with OpenTelemetry as a Fulcrum

Telemetry is the process of gathering data from instruments, sensors, and other devices, often situated in remote or inaccessible areas, and transmitting it from multiple sources to a single place for monitoring, analysis, and control. Depending on the application, these sensors can measure a wide range of characteristics, including temperature, pressure, velocity, location, vibration, and any other relevant quantity. Once gathered, the data is sent from these distant sites to a monitoring system or central receiving station. This transmission can happen in several ways: wireless communication (such as radio frequency, cellular networks, or satellite links), wired connections (such as cables or fiber optics), or even acoustic or optical signals.

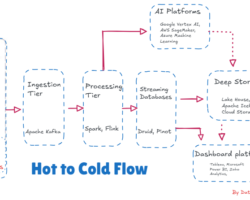

At the central receiving station, the transmitted data is received and processed. This can involve decoding the incoming signals, converting analog data to digital form, and organizing the data for further analysis. The received telemetry data is typically stored in databases or data repositories for analysis and archiving. Analysis can include real-time monitoring, trend analysis, predictive analytics, anomaly detection, or any other processing that yields insights and supports informed decisions. To make the results understandable and useful for operators, engineers, or decision-makers, they may be presented as charts, graphs, dashboards, or reports. Telemetry systems may also include control mechanisms that let operators remotely adjust settings in response to the analysis.
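The decoding and analysis steps above can be sketched in a few lines. This is a minimal illustration, assuming each reading arrives as UTF-8 JSON with hypothetical `seq` and `temperature` fields. It decodes the payloads and then applies one simple anomaly-detection technique, a rolling z-score against the previous few readings. The function names, window size, and threshold are illustrative choices, not part of any particular telemetry system.

```python
import json
import statistics
from collections import deque


def decode(payload: bytes) -> dict:
    """Decode one transmitted reading (assumed to be UTF-8 encoded JSON)."""
    return json.loads(payload.decode("utf-8"))


def detect_anomalies(readings, field="temperature", window=5, threshold=3.0):
    """Yield readings whose `field` value lies more than `threshold` population
    standard deviations from the mean of the previous `window` readings."""
    history = deque(maxlen=window)
    for reading in readings:
        value = reading[field]
        if len(history) == window:
            mean = statistics.fmean(history)
            stdev = statistics.pstdev(history)
            if stdev > 0 and abs(value - mean) > threshold * stdev:
                yield reading
        history.append(value)  # anomalies also enter the window in this simple version


# Hypothetical payloads as they might arrive at the receiving station.
raw = [
    json.dumps({"seq": i, "temperature": t}).encode("utf-8")
    for i, t in enumerate([20.1, 20.3, 19.9, 20.0, 20.2, 35.7, 20.1])
]
readings = [decode(p) for p in raw]
print([r["seq"] for r in detect_anomalies(readings)])  # prints [5]
```

A rolling window keeps the check cheap enough to run on a live stream; production systems would typically tune the window and threshold per sensor or use a more robust method.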

Here are several reasons why Apache Kafka matters when it comes to managing telemetry data.

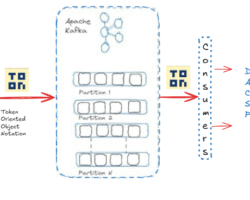

- Scalability – Apache Kafka is well suited to managing telemetry data because it handles massive real-time data streams efficiently. Telemetry data often arrives in large volumes, especially in IoT deployments or real-time monitoring of industrial systems. Kafka's distributed architecture scales horizontally: topics are split into partitions that are spread across brokers, so the cluster can absorb growing data loads as more machines are added and partitions are distributed over them. This lets telemetry systems grow to accommodate larger data streams without sacrificing performance.

- High Throughput and Low Latency – Kafka writes records to append-only, partitioned logs and lets producers and consumers move records in batches, which allows it to sustain high throughput while keeping latency low. Together with its scalability, reliability, and integration with streaming analytics frameworks, this makes Kafka a strong, efficient platform for ingesting, processing, and distributing real-time telemetry data, so businesses can gain insights quickly and make informed decisions.

- Reliability and Durability – Telemetry data frequently contains valuable information, so it should survive system failures. Kafka can replicate each partition across several brokers, and when topics are configured with an adequate replication factor and producers wait for acknowledgement from all in-sync replicas (`acks=all`), the cluster can withstand broker failures without losing committed data. This durability and reliability are what allow telemetry systems to run continuously without interruption while preserving data integrity.
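Horizontal scaling in Kafka rests on partitioning: as long as the partition count does not change, records with the same key go to the same partition, so per-key ordering is preserved while different keys are spread across partitions (and therefore across brokers). The sketch below illustrates the idea with sensor IDs as keys. It is a deliberate simplification: Kafka's default partitioner hashes keys with murmur2, whereas this example uses `zlib.crc32` only because it is in the Python standard library, and `NUM_PARTITIONS`, `choose_partition`, and `route` are hypothetical names.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 6  # hypothetical partition count for a telemetry topic


def choose_partition(key: bytes, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a record key to a partition index.

    Stand-in hash: Kafka's default partitioner uses murmur2, not CRC32.
    CRC32 is used here only because it ships with the standard library.
    """
    return zlib.crc32(key) % num_partitions


def route(records):
    """Append each (key, value) record to its partition, keeping arrival order."""
    partitions = defaultdict(list)
    for key, value in records:
        partitions[choose_partition(key)].append(value)
    return partitions


# Twelve interleaved readings from four hypothetical sensors, keyed by sensor ID.
records = [(f"sensor-{i % 4}".encode(), (f"sensor-{i % 4}", i)) for i in range(12)]
partitions = route(records)
for partition, values in sorted(partitions.items()):
    print(partition, values)
```

Because every reading from one sensor lands in one partition, a consumer sees that sensor's readings in the order they were produced, while the load from many sensors is divided among partitions.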

OpenTelemetry is an open-source observability framework that standardizes how telemetry data is collected and handled in distributed systems, such as microservices and applications, so that their behavior and performance can be understood. It defines unified APIs for working with telemetry data, making it easier for developers to work across different languages and environments. These APIs provide consistent interfaces for generating and handling metrics, traces, and logs, regardless of the underlying implementation details.

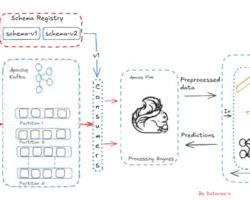

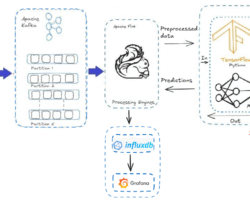

Within the observability and data processing landscape, Apache Kafka and OpenTelemetry play distinct but complementary roles. Although they address different aspects of managing telemetry data, they can be combined into a complete observability solution for distributed systems. Because Apache Kafka is a distributed messaging system, it can be difficult to track how messages move between producers, brokers, and consumers. This is where OpenTelemetry comes in: it provides instrumentation libraries that add tracing to messaging-based applications.

Suppose we are running an application that uses the Kafka clients API both to produce and to consume messages. For simplicity, let us leave out any additional tracing within the business logic and focus solely on the Kafka-related processes, that is, on monitoring how messages are produced and consumed through the Kafka clients. To achieve this, we can deploy an agent alongside the application. The agent intercepts outgoing and incoming messages and enriches them with tracing information.
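The enrichment described above amounts to context propagation through Kafka record headers. By default OpenTelemetry uses the W3C Trace Context format, in which a `traceparent` header carries a version, a 16-byte trace ID, an 8-byte parent span ID, and trace flags. The hand-rolled sketch below shows a producer injecting that header and a consumer extracting it so its processing span joins the same trace. It is not the agent's code: `SpanContext`, `new_span`, `inject`, and `extract` are hypothetical names, and record headers are modelled simply as a list of `(str, bytes)` pairs.

```python
import re
import secrets
from dataclasses import dataclass
from typing import List, Optional, Tuple

Headers = List[Tuple[str, bytes]]  # record headers modelled as (key, value) pairs

# W3C Trace Context, version 00: version-traceid-parentid-flags
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


@dataclass
class SpanContext:
    trace_id: str  # 32 lowercase hex characters (16 bytes)
    span_id: str   # 16 lowercase hex characters (8 bytes)
    sampled: bool = True


def new_span(parent: Optional[SpanContext] = None) -> SpanContext:
    """Start a span, continuing the parent's trace if one is given."""
    if parent is None:
        return SpanContext(trace_id=secrets.token_hex(16), span_id=secrets.token_hex(8))
    return SpanContext(parent.trace_id, secrets.token_hex(8), parent.sampled)


def inject(ctx: SpanContext, headers: Headers) -> Headers:
    """Producer side: return headers with a traceparent entry appended."""
    flags = "01" if ctx.sampled else "00"
    value = f"00-{ctx.trace_id}-{ctx.span_id}-{flags}"
    return headers + [("traceparent", value.encode("ascii"))]


def extract(headers: Headers) -> Optional[SpanContext]:
    """Consumer side: rebuild the producer's span context if a valid header exists."""
    for key, value in headers:
        if key == "traceparent":
            match = TRACEPARENT_RE.match(value.decode("ascii"))
            if match:
                trace_id, span_id, flags = match.groups()
                return SpanContext(trace_id, span_id, sampled=(int(flags, 16) & 1) == 1)
    return None


producer_span = new_span()           # span around the "send" call
headers = inject(producer_span, [])  # header travels with the record
parent = extract(headers)            # read back on the consumer side
consumer_span = new_span(parent)     # "process" span joins the same trace
print(consumer_span.trace_id == producer_span.trace_id)  # prints True
```

Sharing the trace ID is what lets a tracing backend stitch the producer's and consumer's spans into one end-to-end view of the message's journey.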

Using the agent is the more straightforward and automated method: tracing is integrated into the application without modifying or extending the existing codebase, and without introducing dependencies on OpenTelemetry-specific libraries. The OpenTelemetry agent runs alongside the application and adds the logic needed to trace messages sent to and received from an Apache Kafka cluster.
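The OpenTelemetry Java agent instruments the Kafka client classes at the bytecode level, so the application's own calls stay unchanged. As a loose analogue only, the Python sketch below wraps the `send` method of a hypothetical in-memory producer at startup, so unmodified application code ends up sending records that carry a `traceparent` header. `InMemoryProducer` and `instrument` are hypothetical names, and for brevity the wrapper starts a new trace on every send, whereas real instrumentation would continue the current trace when one is active.

```python
import functools
import secrets


class InMemoryProducer:
    """Hypothetical stand-in for a Kafka producer; keeps sent records in a list."""

    def __init__(self):
        self.sent = []

    def send(self, topic, value, headers=None):
        self.sent.append((topic, value, list(headers or [])))


def instrument(producer_cls):
    """Patch producer_cls.send so every record gets a traceparent header.

    Application code keeps calling send() exactly as before.
    """
    original_send = producer_cls.send

    @functools.wraps(original_send)
    def traced_send(self, topic, value, headers=None):
        # New trace per send (simplification): version-traceid-spanid-flags
        traceparent = f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"
        headers = list(headers or []) + [("traceparent", traceparent.encode("ascii"))]
        return original_send(self, topic, value, headers=headers)

    producer_cls.send = traced_send
    return producer_cls


# "Agent" step: runs once at startup, outside the business logic.
instrument(InMemoryProducer)

# Unmodified application code.
producer = InMemoryProducer()
producer.send("telemetry", b'{"temperature": 20.4}')
topic, value, headers = producer.sent[0]
print(headers[0][0])  # prints traceparent
```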

Apache Kafka is just one of the messaging platforms available for communication among microservices in a distributed system, and monitoring the exchange of messages and troubleshooting problems can be very complex. This is precisely where OpenTelemetry steps in, putting the power of tracing directly in our hands. At a high level, we have seen how the Kafka clients instrumentation library simplifies adding tracing details to Kafka-based applications.

I hope you enjoyed this read. Please like and share if you found it valuable.

Reference: https://opentelemetry.io/

Written by

Gautam Goswami

He can be reached at [email protected] for real-time POC development and hands-on technical training, as well as for design, development, and help with any Hadoop/Big Data task, Apache Kafka, streaming data, etc. Gautam is an advisor and an educator. Before that, he worked as a Sr. Technical Architect across various technologies and business domains in numerous countries.

He is passionate about sharing knowledge through blogs and through training workshops on various Big Data technologies, frameworks, and related tools.