A credible approach to Big Data processing and subsequent analysis of telecom data to minimize crime and combat terrorism, antisocial activities, etc.

– To find the duration of each call made from a particular number.

– The numbers from which the calls are being made (calling party)

– The numbers receiving the calls (called party)

– When the call started (date and time)

– How long the call was (duration)

– The identifier of the telephone exchange writing the record

– Call type (voice, SMS, etc.)

– Whether the SIM card has been destroyed and the same phone was used with a new SIM card

– If both the phone and the SIM were replaced with new ones and calls were made to the contacts of the previous phone or SIM, the calling party can be tracked through call patterns.

– Capturing the maximum number of calls made to a particular number, and their duration.
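The fields and questions listed above can be sketched in a few lines of Python. This is only an illustration, not the actual implementation: the `CallRecord` class, its field names, and the two helper functions are hypothetical, and a real CDR layout differs from operator to operator.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class CallRecord:
    """One CDR row; field names are assumed for illustration."""
    calling_party: str    # number that made the call
    called_party: str     # number that received the call
    start_time: datetime  # when the call started
    duration_sec: int     # how long the call lasted, in seconds
    exchange_id: str      # exchange that wrote the record
    call_type: str        # "voice", "sms", ...


def summarize_calls_to(records, target_number):
    """Return {calling_party: (call_count, total_duration_sec)} for calls to target_number."""
    summary = defaultdict(lambda: [0, 0])
    for rec in records:
        if rec.called_party == target_number:
            summary[rec.calling_party][0] += 1
            summary[rec.calling_party][1] += rec.duration_sec
    return {caller: tuple(stats) for caller, stats in summary.items()}


def most_frequent_caller(records, target_number):
    """Return (calling_party, (call_count, total_duration_sec)) or None.

    Callers are ranked by call count; ties are broken by total duration.
    """
    summary = summarize_calls_to(records, target_number)
    if not summary:
        return None
    return max(summary.items(), key=lambda item: item[1])
```

On a cluster the same grouping would be expressed as a distributed aggregation keyed on the calling and called numbers; the in-memory version here only shows the logic.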

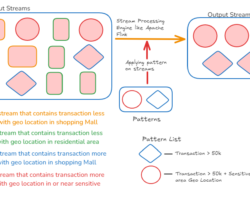

– Live location capture of a suspected number

– Details of past locations of the suspected number, along with the patterns of visits.

– Which numbers were called, and how many times, during a day.

– Where the person was when the call was made.

– Where the person currently is, based on the geo-location feed.
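As a rough sketch of how the call and location questions above could be answered, the snippet below counts calls per day and looks up the last known position at a given moment. The tuple layouts and the function names `calls_per_day` and `location_at` are assumptions made for the example, not part of any real feed format.

```python
from bisect import bisect_right
from collections import Counter
from datetime import datetime


def calls_per_day(calls, calling_party):
    """Count calls per (date, called number) made by calling_party.

    `calls` is an iterable of (calling_party, called_party, start_time) tuples.
    """
    counts = Counter()
    for caller, called, start_time in calls:
        if caller == calling_party:
            counts[(start_time.date(), called)] += 1
    return counts


def location_at(pings, when):
    """Return (lat, lon) of the latest ping at or before `when`, or None.

    `pings` is a list of (timestamp, lat, lon) tuples sorted by timestamp.
    """
    timestamps = [ts for ts, _, _ in pings]
    idx = bisect_right(timestamps, when)  # number of pings with timestamp <= when
    if idx == 0:
        return None  # no ping recorded yet at that time
    _, lat, lon = pings[idx - 1]
    return (lat, lon)
```

Passing a call's start time to `location_at` gives the position when the call was made; passing the current time gives the most recent known position.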

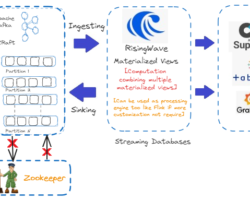

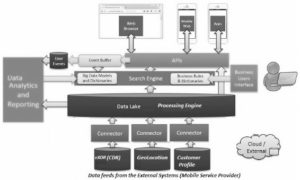

To counter the business and technical challenges of processing massive volumes of CDR and GPS data to achieve the above, we can set up an end-to-end multi-node Hadoop cluster to hold and process petabytes of data. We can define mappings for each CDR file and write a component that processes each file in a distributed manner with zero data loss, applying data quality (DQ) checks to extract good records from the hundreds of GB or TB of data generated continuously from multiple interfaces, including mobile towers. By developing a module to ingest the geo-location feed and customer data (provided by the ISP) into the multi-node HDFS cluster and processing the data immediately afterwards, we can achieve a geo-fencing feature that helps security agencies conduct behavioral analysis of suspected persons or groups. In addition, using the QlikView reporting tool, gatherings of criminals or antisocial persons can be plotted to help prevent future crimes. This high-level architectural diagram can be leveraged to implement the entire solution.
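The geo-fencing idea can be illustrated with a circular fence and the haversine great-circle distance. This is a minimal sketch under stated assumptions: the fence is a circle given by a centre and a radius in kilometres, positions are plain latitude/longitude pairs, and the function names are hypothetical. A production system might use polygon fences or a geospatial library instead.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point overshoot before asin.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def inside_geofence(lat, lon, center_lat, center_lon, radius_km):
    """True if the point lies within radius_km of the fence centre."""
    return haversine_km(lat, lon, center_lat, center_lon) <= radius_km


def numbers_inside(latest_positions, center_lat, center_lon, radius_km):
    """Return the sorted numbers whose latest position is inside the fence.

    `latest_positions` maps a phone number to its latest (lat, lon).
    """
    return sorted(
        number
        for number, (lat, lon) in latest_positions.items()
        if inside_geofence(lat, lon, center_lat, center_lon, radius_km)
    )
```

Running `numbers_inside` over the latest position of each suspected number gives the set currently inside a fenced area, which is the kind of result that could then be plotted on a dashboard.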

We need a mechanism to purify corrupt data before ingestion into the multi-node HDFS cluster. Data quality is a major factor in delivering the final dashboard. By collecting good-quality data from telecom operators, we can present the final dashboard to the security or investigating agency. Ideally, the telecom company or mobile service provider filters the data feeds before exporting them to a third-party system or cloud environment for processing.
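A data quality check of this kind might look like the sketch below, which splits raw CDR lines into good records and rejected rows with a reason. Everything specific here is an assumption for illustration: the comma-separated layout, the `CDR_FIELDS` column mapping, the timestamp format, and the accepted call types would all come from the operator's real file specification.

```python
import csv
from datetime import datetime

# Hypothetical column mapping for one CDR feed; real layouts vary by operator.
CDR_FIELDS = ["calling_party", "called_party", "start_time",
              "duration_sec", "exchange_id", "call_type"]
VALID_CALL_TYPES = {"voice", "sms"}  # assumed set of call types


def validate_row(row):
    """Return None if the row passes all checks, else a short rejection reason."""
    if len(row) != len(CDR_FIELDS):
        return "wrong field count"
    rec = dict(zip(CDR_FIELDS, row))
    if not rec["calling_party"].isdigit() or not rec["called_party"].isdigit():
        return "non-numeric phone number"
    try:
        datetime.strptime(rec["start_time"], "%Y-%m-%d %H:%M:%S")
    except ValueError:
        return "bad timestamp"
    if not rec["duration_sec"].isdigit():
        return "bad duration"
    if rec["call_type"] not in VALID_CALL_TYPES:
        return "unknown call type"
    return None


def split_good_bad(lines):
    """Split raw CSV lines into (good_records, rejected_rows_with_reason)."""
    good, bad = [], []
    for row in csv.reader(lines):
        reason = validate_row(row)
        if reason is None:
            good.append(dict(zip(CDR_FIELDS, row)))
        else:
            bad.append((row, reason))
    return good, bad
```

Keeping the rejected rows together with a reason, rather than silently dropping them, makes it possible to report back to the data provider which feeds are producing corrupt records.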

Written by

Gautam Goswami

He can be contacted for real-time POC development and hands-on technical training, and to develop or support any Hadoop-related project. Email: [email protected]. Gautam is a consultant as well as an educator. Prior to that, he worked as a Sr. Technical Architect across multiple technologies and business domains. Currently, he specializes in Big Data processing and analysis, data lake creation, architecture, etc. using HDFS. He is also involved in HDFS maintenance, loading multiple types of data from different sources, and designing and developing real-time use cases on client demand to demonstrate how data can be leveraged for business transformation, profitability, etc. He is passionate about sharing knowledge through blogs, seminars, presentations, etc. on various Big Data technologies, methodologies, real-time projects with their architecture and design, multiple procedures for ingesting huge volumes of data, basic data lake creation, etc.