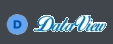

Network Topology to Create a Multi-Node Hybrid Cluster for Hadoop Installation

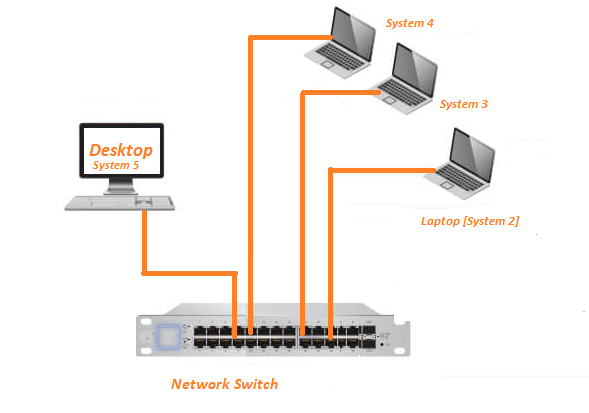

The aim of this article is to outline how to create a network topology for a multi-node hybrid Hadoop cluster using limited hardware resources. Such a cluster is useful for learning Hadoop and for processing smaller volumes of unstructured data with various engines. Before setting up the cluster, we installed Hadoop as a single-node cluster on Ubuntu 14.04, running as a guest OS on Windows 10 in VMware Workstation Player. We then copied the .vmx file to each of the systems identified to run a Hadoop DataNode under VMware, based on their available hardware resources. This avoids repeating the installation and saves time. Configuring the DataNodes with the NameNode will be explained in a separate article. The hardware and systems used to create this network topology are listed below.

1. D-Link DES-1005C 10/100 Network Switch

2. Dell Inspiron 5458 Laptop with 16 GB RAM and Windows 10 as host operating system

3. Dell Inspiron 1525 Laptop with 2 GB RAM and Ubuntu 14.04 as operating system

4. Lenovo B40-80 Laptop with 4 GB RAM and Windows 10 as host operating system

5. Desktop with 8 GB RAM and Windows 7 Professional as host operating system

6. Straight-through cables

Practical considerations before creating the cluster

VMware Workstation Player 12 was installed on systems 2 and 4, with Ubuntu 14.04 installed on top of it as the guest OS. Similarly, VMware Workstation Player 7.x was installed on system 5, again with Ubuntu 14.04 as the guest OS. We disabled the Windows firewall and the antivirus firewall on all systems, because an enabled firewall restricts packets entering and leaving each machine and can prevent the systems in the cluster from communicating with each other. Before networking the systems, we scanned each one individually with strong antivirus software to remove malware, viruses, etc.

We chose a network switch rather than a router so that the cluster had no internet connectivity; with the firewalls disabled, an internet connection would expose the systems to malware and virus infection. Straight-through cables are typically used to connect different types of devices, such as PC to switch, PC to router, or router to switch, so we used straight-through cables throughout. As a rule of thumb, the following steps must be completed in order to enable LAN communication among the physical systems and the VMs running on them.

Step 1:- Ethernet LAN setup using Network Switch

Step 2:- Network Adapter setting on VMware Workstation Player

Step 3:- Configure Internet Protocol Version 4 (TCP/IPv4) on Windows

Step 4:- Assign static IP addresses to Ubuntu 14.04, both on native installations and on guests running in VMware Workstation Player

Execution Steps in sequence

Step 1:- Ethernet LAN setup using Network Switch

Most modern computers have a built-in Ethernet adapter, with the port located on the back or side of the machine. Locate the Ethernet port on each system and connect an Ethernet cable (Cat-5e) between it and an open port on the network switch. Repeat this for every system until all of them are connected to the switch.

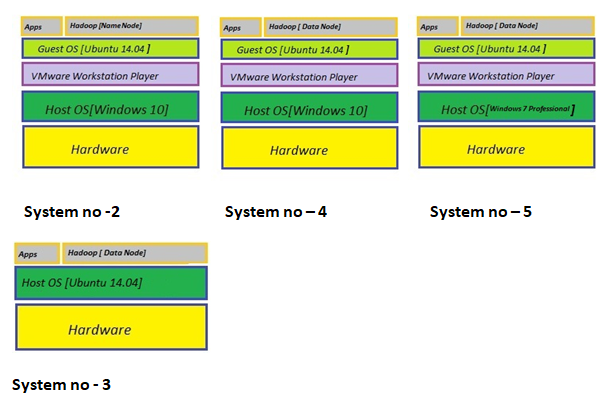

Step 2:- Network Adapter setting on the VM Players running on Windows 10 and Windows 7 Professional

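As mentioned above, we copied the .vmx file of the single-node Hadoop cluster to systems 2, 4, and 5, after installing VMware Workstation Player 12.x and 7.x on those systems. Before powering on the virtual machine, set its Network Adapter to Bridged (Automatic); by default it is set to NAT. With NAT, the guest sits on a private network behind the host's IP address and cannot be reached directly from other machines on the LAN, whereas in bridged mode the guest appears on the physical network as a separate host with its own IP address.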

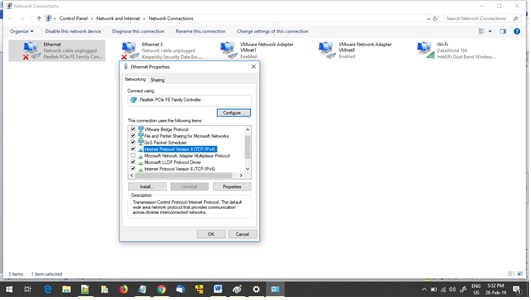
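When the same change has to be made on several copied VMs, it can also be made directly in the .vmx file. The sketch below assumes the copied VM has a single adapter named ethernet0 whose mode is stored in the ethernet0.connectionType key ("nat" for NAT, "bridged" for bridged); the function names and the example path are hypothetical. Edit the file only while the VM is powered off and the Player is closed, because VMware may rewrite the .vmx while it is running.

```python
import re
from pathlib import Path

# Matches e.g.  ethernet0.connectionType = "nat"  (key matched case-insensitively)
_CONN_TYPE = re.compile(r'^(\s*ethernet0\.connectionType\s*=\s*)"[^"]*"\s*$', re.IGNORECASE)


def set_bridged(vmx_text: str) -> str:
    """Return the .vmx content with ethernet0 switched to bridged networking."""
    out, found = [], False
    for line in vmx_text.splitlines():
        match = _CONN_TYPE.match(line)
        if match:
            # Keep the original key and spacing, replace only the quoted value
            out.append(match.group(1) + '"bridged"')
            found = True
        else:
            out.append(line)
    if not found:
        # Assumption: ethernet0 exists but has no explicit connectionType line
        out.append('ethernet0.connectionType = "bridged"')
    return "\n".join(out) + "\n"


def update_vmx_file(path: str) -> None:
    """Rewrite a .vmx file in place; run only while the VM is powered off."""
    vmx = Path(path)
    # surrogateescape round-trips any non-UTF-8 bytes unchanged
    text = vmx.read_text(encoding="utf-8", errors="surrogateescape")
    vmx.write_text(set_bridged(text), encoding="utf-8", errors="surrogateescape")


# Hypothetical path to one of the copied VMs:
# update_vmx_file(r"C:\VMs\hadoop-node\hadoop-node.vmx")
```

Only the value of one key is touched, so the rest of the copied VM's configuration stays exactly as it was.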

Step 3:- Configure Internet Protocol Version 4 (TCP/IPv4) on Windows

To assign a static IP address on each system running Windows 10 or Windows 7 Professional, configure IPv4 from the “Networking” tab of the “Ethernet Properties” dialog, reached via “Control Panel” -> “Network and Internet” -> “Network Connections” (right-click the Ethernet adapter and choose “Properties”).

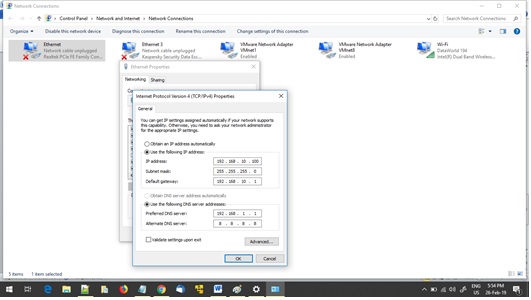

Select “Internet Protocol Version 4 (TCP/IPv4)”, make sure its check box is ticked, and click the “Properties” button. Another dialog opens with the “Obtain an IP address automatically” radio button selected by default.

Select “Use the following IP address” instead. The last octet of the IP address must be different for each system (for example, 192.168.10.XXX), while the subnet mask (255.255.255.0) and default gateway must be the same on every system running Windows. We used 192.168.10.1 as the default gateway, and set “Preferred DNS server” and “Alternate DNS server” to 192.168.1.1 and 8.8.8.8 respectively. After completing this on all the Windows systems, we switched on the network switch and verified that Systems 2, 4, and 5 could reach each other by pinging their IP addresses from the command prompt.

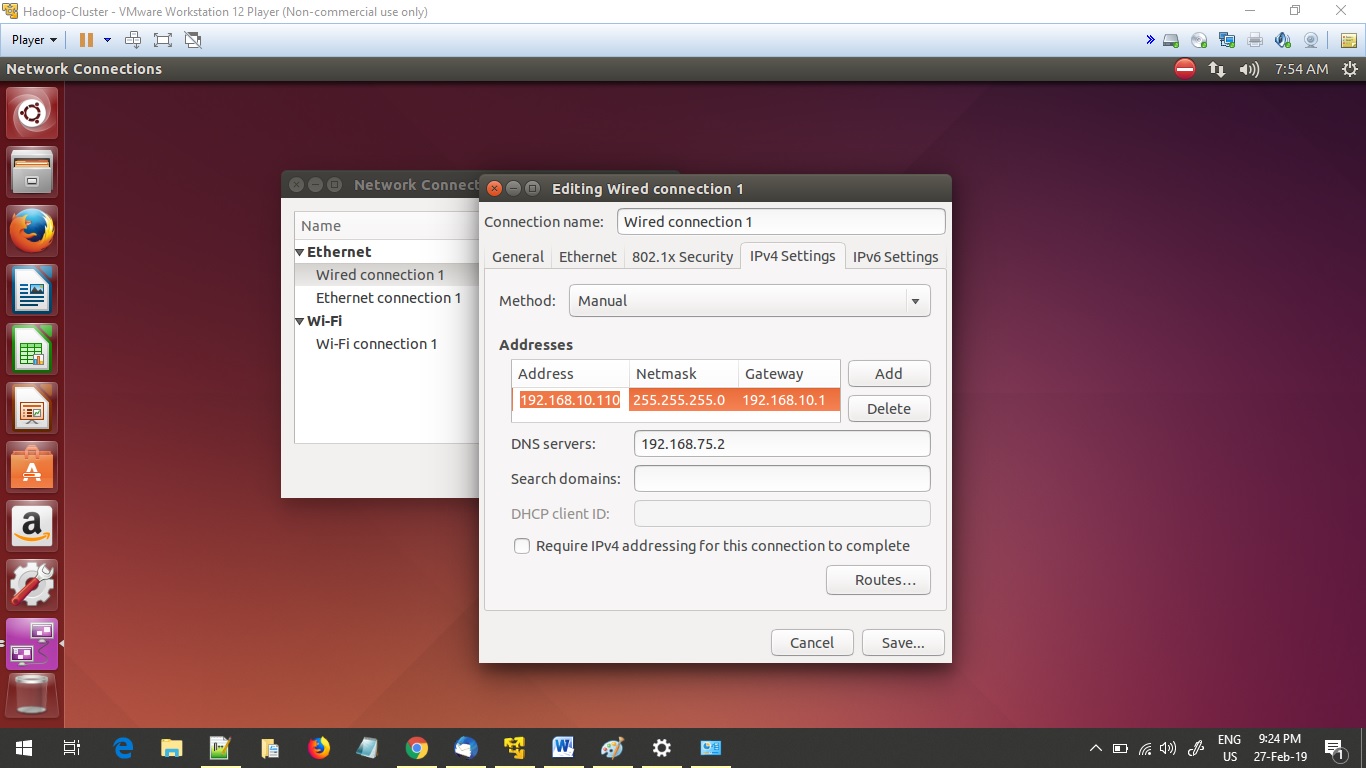
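The same settings can alternatively be applied from an elevated Command Prompt with netsh instead of the dialog. The Python 3.6+ sketch below only builds the command strings so they can be reviewed before running them as Administrator. It assumes the adapter is named "Ethernet" (on Windows 7 it is often "Local Area Connection"; check the name in Network Connections) and uses a hypothetical host address, 192.168.10.101; the gateway and DNS values are the ones used in this article, and the function name is hypothetical.

```python
import ipaddress


def netsh_commands(interface: str, address: str, netmask: str, gateway: str,
                   dns_primary: str, dns_alternate: str) -> list:
    """Build netsh commands equivalent to the manual TCP/IPv4 dialog settings."""
    # strict=False because the address has host bits set (it is a host, not a network)
    network = ipaddress.IPv4Network(f"{address}/{netmask}", strict=False)
    if ipaddress.IPv4Address(gateway) not in network:
        raise ValueError(f"gateway {gateway} is not in {network}")
    return [
        f'netsh interface ipv4 set address name="{interface}" source=static '
        f'address={address} mask={netmask} gateway={gateway}',
        f'netsh interface ipv4 set dnsservers name="{interface}" source=static '
        f'address={dns_primary} register=primary',
        f'netsh interface ipv4 add dnsservers name="{interface}" '
        f'address={dns_alternate} index=2',
    ]


if __name__ == "__main__":
    # Hypothetical adapter name and host address; gateway/DNS values are from the article
    for cmd in netsh_commands("Ethernet", "192.168.10.101", "255.255.255.0",
                              "192.168.10.1", "192.168.1.1", "8.8.8.8"):
        print(cmd)
```

Generating the commands from one function makes it easy to change only the last octet per machine while keeping the netmask and gateway identical everywhere.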

Step 4:- Assign static IP addresses to Ubuntu 14.04, both on native installations and on guests running in VMware Workstation Player

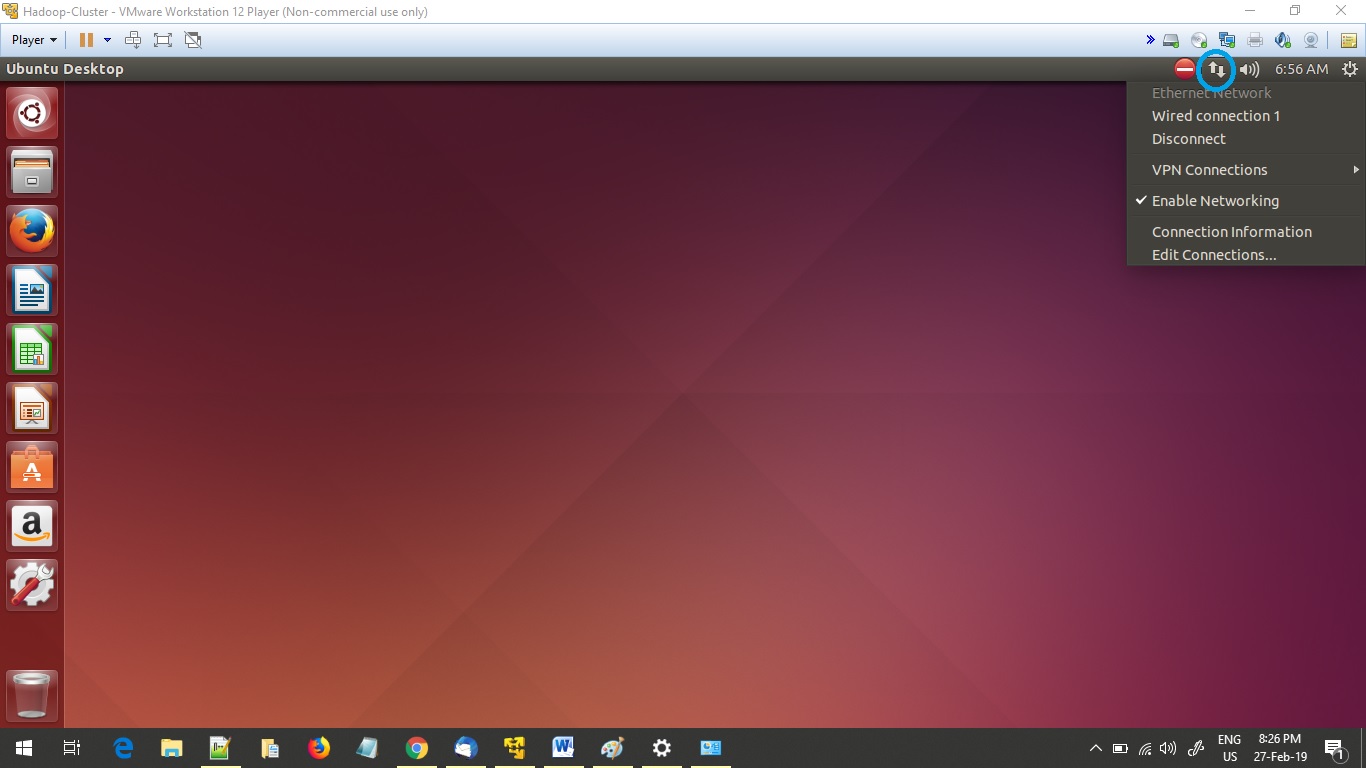

Although System 3 has only 2 GB of RAM, the Ubuntu 14.04 GUI is fully usable on it because Ubuntu runs directly on the hardware rather than inside VMware Workstation Player. We therefore used the GUI to assign its static IP address.

After logging in to System 3, open “Edit Connections” by right-clicking the network icon to the left of the speaker icon. Depending on the connection state, this icon may appear as two arrows pointing in opposite directions.

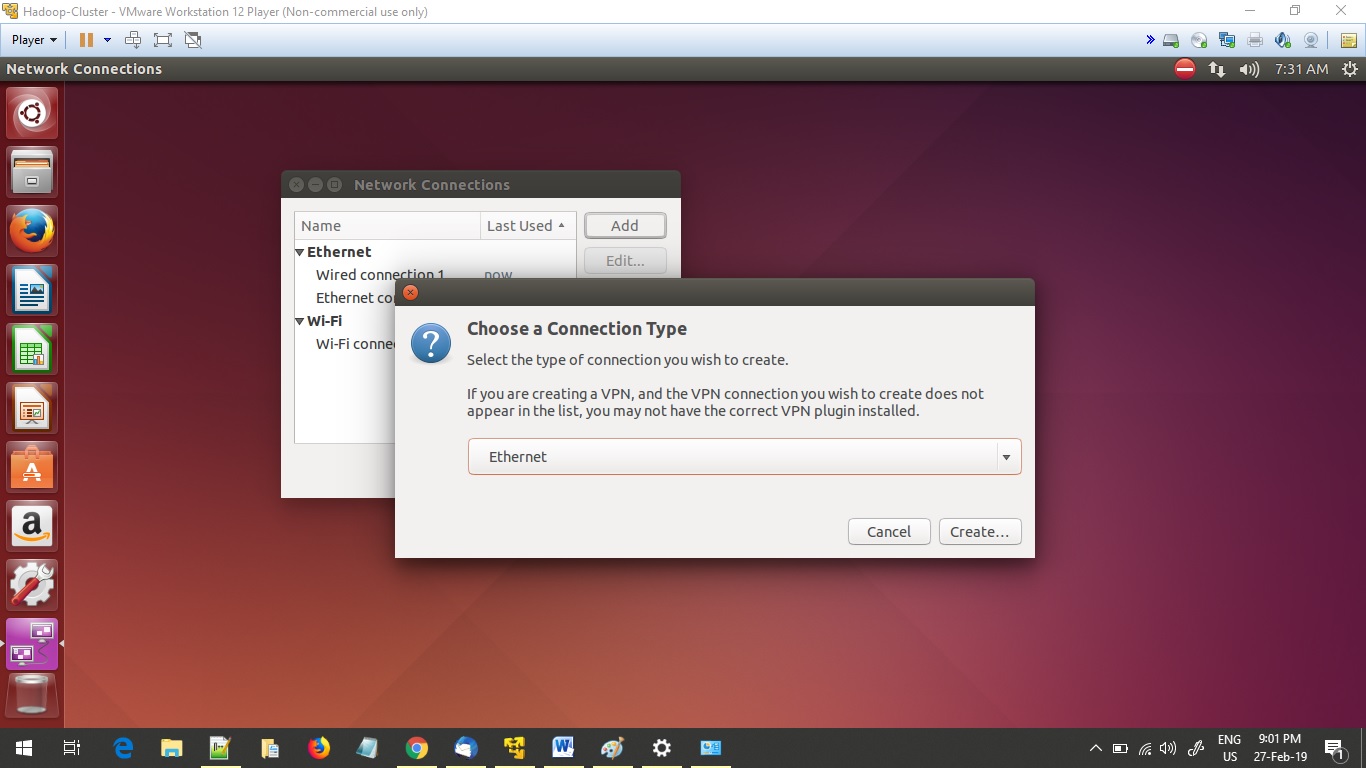

Click “Add”, select “Ethernet” as the connection type, and click “Create”.

On the “IPv4 Settings” tab, select “Manual” from the “Method” drop-down, then enter the “Address”, “Netmask”, “Gateway”, and “DNS servers” values. The “Netmask” and “Gateway” values must be the same on all systems, including the Ubuntu 14.04 guests running on VMware Workstation Player.
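Because one machine with a mismatched netmask or gateway is an easy mistake to miss, it can help to write the whole addressing plan down and check it mechanically. This Python 3.7+ sketch flags nodes whose netmask or gateway differs from the first entry, gateways outside a node's subnet, and duplicate addresses. The NodeConfig and check_plan names are hypothetical, as are the example addresses; only the 255.255.255.0 netmask and 192.168.10.1 gateway come from this article.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeConfig:
    name: str      # label for the physical machine or VM
    address: str   # static IPv4 address
    netmask: str
    gateway: str


def check_plan(nodes):
    """Return a list of problems; an empty list means the addressing plan is consistent."""
    problems = []
    if not nodes:
        return problems
    ref = nodes[0]  # every node is compared against the first one
    used = {}
    for node in nodes:
        if node.netmask != ref.netmask:
            problems.append(f"{node.name}: netmask {node.netmask} differs from {ref.netmask}")
        if node.gateway != ref.gateway:
            problems.append(f"{node.name}: gateway {node.gateway} differs from {ref.gateway}")
        network = ipaddress.IPv4Network(f"{node.address}/{node.netmask}", strict=False)
        if ipaddress.IPv4Address(node.gateway) not in network:
            problems.append(f"{node.name}: gateway {node.gateway} is outside {network}")
        if node.address in used:
            problems.append(f"{node.name}: address {node.address} already used by {used[node.address]}")
        else:
            used[node.address] = node.name
    return problems


if __name__ == "__main__":
    mask, gw = "255.255.255.0", "192.168.10.1"
    # Hypothetical plan; replace with the addresses actually assigned
    plan = [
        NodeConfig("system2-host", "192.168.10.101", mask, gw),
        NodeConfig("system3", "192.168.10.103", mask, gw),
        NodeConfig("system4-guest", "192.168.10.110", mask, gw),
    ]
    print(check_plan(plan) or "plan is consistent")
```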

The following steps assign a static IP address to Ubuntu 14.04 on Systems 4 and 5, where it runs as a guest OS inside VMware Workstation Player 12.x and 7.x respectively. Because little RAM was allocated to these virtual machines (2 GB and 6 GB), the Ubuntu 14.04 GUI was not available, so we worked from the terminal with the vi editor.

a. By default, the root account is locked for direct login on Ubuntu. Log in as your normal user and open a terminal.

b. Type sudo su - to open a root shell.

c. When prompted, enter your account password and press Enter.

d. Open /etc/network/interfaces in the vi editor from the terminal:

```
vi /etc/network/interfaces
```

The file will look similar to this:

```
auto eth0
iface eth0 inet dhcp
```

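On some systems the file contains only the loopback entries (auto lo and iface lo inet loopback). lo is the loopback interface rather than the Ethernet adapter, so keep those lines as they are and add the eth0 entry below them.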

Change the eth0 entry to the following:

```
auto eth0
iface eth0 inet static
    address 192.168.10.110
    netmask 255.255.255.0
    gateway 192.168.10.1
```

The network and dns-nameservers entries are optional, so we skipped them. The gateway value must be the same as the one entered on all the Windows systems, and the last octet of the address must be unique on each system. We assigned addresses following the pattern 192.168.10.XXX, keeping the last octet between 100 and 200.

e. Save the file and exit vi by typing :wq!

f. Restart the system.

g. After logging in again, run ifconfig in the terminal to verify the changes.
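When several guests need the same kind of static entry, generating it can be less error-prone than typing it in vi on each one. The Python 3.6+ sketch below renders an /etc/network/interfaces file with the loopback entries plus a static eth0 stanza, enforcing the 100-200 range for the last octet described in step d. It assumes the interface is eth0 and defaults to the netmask and gateway used in this article; the function name is hypothetical. It writes nothing to disk; review the output and copy it into /etc/network/interfaces as root yourself (the script can be run on any machine with Python 3.6+).

```python
import ipaddress


def render_interfaces(address: str, netmask: str = "255.255.255.0",
                      gateway: str = "192.168.10.1", iface: str = "eth0",
                      low: int = 100, high: int = 200) -> str:
    """Render an /etc/network/interfaces file with a static stanza for iface."""
    ip = ipaddress.IPv4Address(address)
    last_octet = ip.packed[-1]  # e.g. 110 for 192.168.10.110
    if not low <= last_octet <= high:
        raise ValueError(f"last octet {last_octet} is outside {low}-{high}")
    network = ipaddress.IPv4Network(f"{address}/{netmask}", strict=False)
    if ipaddress.IPv4Address(gateway) not in network:
        raise ValueError(f"gateway {gateway} is not in {network}")
    lines = [
        "auto lo",
        "iface lo inet loopback",
        "",
        f"auto {iface}",
        f"iface {iface} inet static",
        f"    address {address}",
        f"    netmask {netmask}",
        f"    gateway {gateway}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_interfaces("192.168.10.110"), end="")
```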

After successfully completing all the steps above, we verified connectivity by pinging in the following sequence:

1. Between the host OS and the guest OS on each system running VMware Workstation Player, in both directions.

2. From the host OSes (Windows 10 and Windows 7 Professional on Systems 2, 4, and 5) to System 3, and vice versa.

3. Among the guest VMs running in VMware Workstation Player on Systems 2, 4, and 5, and between these guests and the host OSes, in both directions.

4. From System 3 to the guest VMs running on Systems 2, 4, and 5, and vice versa.
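Running the checks above by hand on every host and guest is repetitive. A minimal Python 3.6+ sketch, assuming Python is available on the machine it runs on, pings a list of addresses from that machine; running it on each host and guest in turn covers the sequence above. The function names and addresses are hypothetical. One caveat: on Windows, ping can exit with status 0 even when the reply is "Destination host unreachable", so a reachable result from a Windows host is worth confirming against ping's own output.

```python
import platform
import subprocess


def ping_command(host: str, count: int = 1, system: str = "") -> list:
    """Build a ping command; Windows uses -n for the count, Linux uses -c."""
    system = system or platform.system()
    flag = "-n" if system == "Windows" else "-c"
    return ["ping", flag, str(count), host]


def reachable(host: str, runner=subprocess.run) -> bool:
    """True if ping exits with status 0; ping's output is discarded."""
    result = runner(ping_command(host), stdout=subprocess.DEVNULL,
                    stderr=subprocess.DEVNULL)
    return result.returncode == 0


def sweep(hosts, runner=subprocess.run) -> dict:
    """Ping every host from the machine this script runs on."""
    return {host: reachable(host, runner) for host in hosts}


# Example (hypothetical addresses; use the static IPs assigned to your systems and VMs):
# for host, ok in sweep(["192.168.10.101", "192.168.10.103", "192.168.10.110"]).items():
#     print(host, "reachable" if ok else "NOT reachable")
```

The runner parameter exists only so the logic can be exercised without a network; in normal use it stays at subprocess.run.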

We hope this article provides useful input for designing the network topology of a multi-node hybrid Hadoop cluster on limited hardware resources. This type of cluster can be used by small companies or training institutes for learning, knowledge enhancement, and processing small volumes of data, while avoiding the cost of cloud service providers.

Written by

Gautam Goswami

Gautam can be contacted for real-time POC development, hands-on technical training, and development or support of any Hadoop-related project. He is a consultant as well as an educator. Previously, he worked as a Sr. Technical Architect across multiple technologies and business domains. He currently specializes in Big Data processing and analysis, data lake creation, and architecture using HDFS. He is also involved in HDFS maintenance, loading multiple types of data from different sources, and designing and developing real-time use cases on client demand to demonstrate how data can be leveraged for business transformation, profitability, etc. He is passionate about sharing knowledge through blogs, seminars, and presentations on various Big Data technologies, methodologies, real-time projects and their architecture/design, procedures for ingesting huge volumes of data, basic data lake creation, etc.