AI on the Fly: Real-Time Data Streaming from Apache Kafka To Live Dashboards

In the current fast-paced digital age, many data sources generate an unending flow of information, a never-ending torrent of facts and figures that, while perplexing when examined separately, provide profound insights when examined together. Stream processing can be useful in this situation. It fills the void between real-time data collecting and actionable insights. It’s a data processing practice that handles continuous data streams from an array of sources. Real-time data streaming has started playing an important impact on modern AI models for applications that need quick decisions. We can consider a few examples where AI models need to deliver instant decisions like self-driving cars, fraud in stock market trading, and smart factories that utilize technology like sensors, robots, and data analytics to automate and optimize manufacturing processes.

Real-time data streaming plays a key role for AI models as it allows them to handle and respond to data as it comes in, instead of just using old fixed datasets. This speed matters a lot for tasks where quick choices can make a big difference, like spotting fraud in money transfers, tweaking suggestions in online shops, or steering self-driving cars, as said above as an example of AI models that need to deliver instant decisions. By leveraging real-time data, AI models can maintain a current understanding of their environment, adapt quickly to changes, and improve performance through continuous updates. What’s more, real-time streaming helps AI work in edge computing and IoT setups where quick processing is often needed. Without the ability to work in real-time, AI systems might become old news, slow to react, and less useful in fast-moving, data-heavy settings.

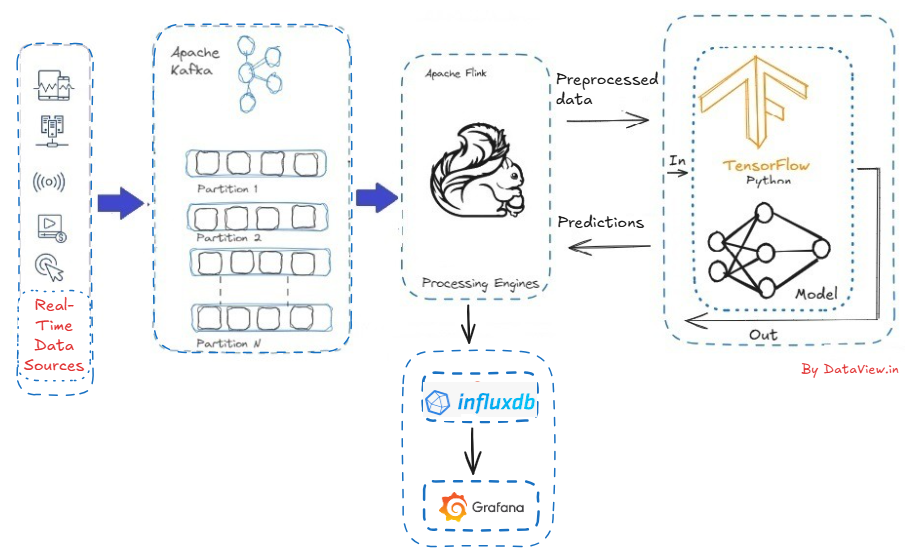

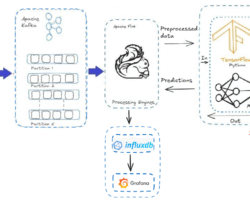

From Source to Streams

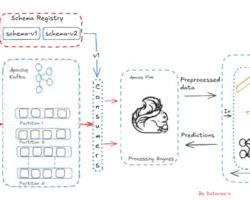

Over the past few years, Apache Kafka has emerged as the leading standard for streaming data. Fast-forward to the present day, Kafka has achieved ubiquity, being adopted by at least 80% of the Fortune 100. Kafka’s architecture versatility makes it exceptionally suitable for streaming data at a vast ‘internet’ scale, ensuring fault tolerance and data consistency crucial for supporting mission-critical applications. Flink is a high-throughput, unified batch and stream processing engine, renowned for its capability to handle continuous data streams at scale. It seamlessly integrates with Kafka and offers robust support for exactly-once semantics, ensuring each event is processed precisely once, even amidst system failures. Flink emerges as a natural choice as a stream processor for Kafka. While Apache Flink enjoys significant success and popularity as a tool for real-time data processing, accessing sufficient resources and current examples for learning Flink can be challenging.

Turning Streams into Insights

After ingesting real-time data stream into the multi-node Apache Kafka cluster and subsequently integrating with the Flink cluster, the ingested streaming data can be allowed for the enhancement, filtering, aggregation and alteration. This has a significant impact on AI systems, as it enables real-time feature engineering to take place before feeding the data to models. As AI systems, we can consider TensorFlow, which is an open-source platform and framework for machine learning, that includes libraries and tools based on Python and Java. It is designed with the objective of training machine learning and deep learning models on data. Here is the pseudocode in Java that demonstrates how we can pass processed stream data from Apache Flink to a TensorFlow AI model. We can use the DataStream API of Flink to ingest streaming data from Kafka’s topic and subsequently parse and process the data. Eventually, send processed data to TensorFlow for prediction.

What’s next from TensorFlow?

Moving the predicted model from TensorFlow to Grafana for dynamic visualization isn’t straightforward. We need to take a few steps in between. This is because Grafana is a multi-platform open-source analytics and interactive visualization web application. It doesn’t work with machine learning models. Instead, it connects to databases that store data over time. For continuous predictions, we can use InfluxDB, TimescaleDB (PostgreSQL extension), or any other vendor-specific Time-series databases. This approach makes it ideal for deploying and tracking models in production that support real-time monitoring, historical trend analysis, and ML model observability.

Conclusion

In today’s world, where we are concerned about every millisecond count, closing the gap between AI and real-time data isn’t just a technical achievement. It gives us an edge over competitors. When we stream data through Apache Kafka and display insights right away on live dashboards, we are not only just watching what’s happening now, we are shaping it too. This real-time AI system turns raw data into instant intelligence, whether it’s spotting unusual patterns, boosting recommendation systems, or guiding operational choices. As information flows faster, those who can respond to it will lead the way. So, connect to the data stream, let our models think, and breathe life into our dashboards. Of course, there are numerous technical problems to solve, starting from data cleansing to the deployment strategy with the right architectural approach.

Thank you for reading! If you found this article valuable, please consider liking and sharing it.

Written by

Gautam Goswami

[…] by time, usage, and processing stages. Hot data is first treated in streaming systems (such as Flink or Spark Streaming) and may be enriched or aggregated. After it serves its original purpose, it is […]