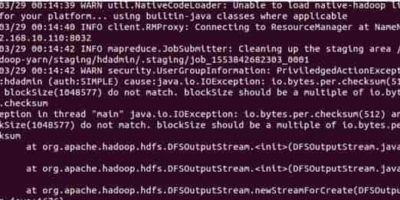

How checksums smartly manage data integrity in HDFS (Hadoop Distributed File System)

Ensuring data integrity is a basic necessity, and the backbone of any big data processing environment, for achieving accurate outcomes. Of course, the same applies when executing any data-moving operations with […]

Manual procedure to add a new DataNode to an existing basic data lake, built using HDFS (Hadoop Distributed File System) on a multi-node cluster, without Apache Ambari or Cloudera Manager

The aim of this article is to highlight the essential steps for adding a new DataNode to an existing multi-node Hadoop cluster. Midsize or startup […]